
Need a compliance guide for the Cyber Resilience Act (CRA)? Here’s a 15-step roadmap to prepare you for the EU’s most important cybersecurity regulation for products with digital elements.

This guide has been developed to support entities whose products are subject to the CRA’s obligations on their compliance journey, providing a clear and practical resource for navigating the regulation’s complex requirements. You’ll find explanations and practical advice, each backed up by the official text of the Cyber Resilience Act, which we quote throughout.

This compliance guide is primarily aimed at those directly involved in digital product security, such as security managers, Chief Technology Officers (CTOs) and Chief Product Officers (CPOs), architects and product teams, as well as legal teams and GRC (Governance, Risk and Compliance) managers.

In short, anyone who, in the course of their work, is responsible at one time or another for the digital security of regulated products placed on the European market.

You can continue reading this guide as an article, or download an enhanced PDF version below for easier reading.

Cyber Resilience Act (CRA): Step-By-Step Guide to Compliance

An 80-page compliance guide to walk security managers and legal departments through the 21 essential requirements of the CRA. No mumbo jumbo, just useful, actionable information.

A Compliance Guide Intended to Be Up-To-Date and Sustainable

This guide also aims to provide an up-to-date reading of the CRA, as close as possible to the final text. The Cyber Resilience Act has undergone numerous revisions over the course of its legislative journey, and the multitude of resources available on the subject, the oldest of which are often obsolete, can make it difficult to understand and apply the law.

This publication is therefore based on the most recent version of the CRA to date, i.e. the text adopted on first reading by the European Parliament on March 12, 2024. It is available in English, in PDF format, on the European Parliament website.

We have deemed this document to be reliable as it’s highly unlikely that major changes — if any — will be introduced to the regulation before it is officially adopted. We therefore hope to offer a guide that is as useful and durable as possible.

It should also be stressed that this document is the result of our own work, and cannot replace the due diligence required of the entities concerned, nor the advice of a qualified legal expert. In other words, it’s up to you to ensure your own compliance, and this document should be considered as nothing more than what it is: an exhaustive and rigorous guide, but one with no legal value whatsoever.

In the following sections, we’ll cover a few general aspects, such as the nature and objectives of the Cyber Resilience Act, its effective date and the fines incurred by offenders. If you’re already familiar with these points, you can skip ahead to Chapter 1.

What Is the Cyber Resilience Act (CRA)?

Let’s start with a reminder, which can do no harm. The Cyber Resilience Act (CRA), referred to during its legislative journey by the procedure number 2022/0272(COD), is a piece of European Union legislation that governs the cybersecurity of products with digital elements made available on the EU market.

This is a major text in terms of European digital resilience, which directly complements other legislative spearheads such as the AI Act (Artificial Intelligence Act) or the NIS2 Directive, for which we have also written a comprehensive compliance guide.

NIS2 Directive: Step-by-Step Guide to Compliance

A 40-page guide to walk CISOs, DPOs and legal departments through the directive. No mumbo jumbo, only useful and actionable insights.

The CRA represents a major turning point, insofar as it formalizes the responsibility of manufacturers, and in some cases importers and distributors, for the digital security of the products they put on the market. They now have no choice but to think about and guarantee the digital security of their products throughout their entire lifecycle, from the earliest stages of design to the end of the support period.

The Objectives of the CRA

The aim of the CRA is obviously to protect consumers, but also to strengthen the Union’s overall level of resilience. Making digital products more secure also means reducing the risks for all users of these products, whether they are private individuals or key entities such as those regulated by NIS2 — hospitals, banks, drinking water production plants, postal services and so on.

To this end, the Cyber Resilience Act establishes:

- Rules for making products with digital elements available on the market, in order to guarantee their cybersecurity;

- Essential requirements for the design, development and production of these products;

- Essential requirements for the vulnerability management processes put in place by manufacturers;

- Rules and provisions for market surveillance and enforcement.

The CRA is, of course, mandatory, and compliance with it is a prerequisite for CE marking of regulated products, as well as for their distribution on the European market. It should also be stressed that the CRA is not a toothless regulation: it comes with coercive measures such as heavy fines.

A Defensive Vision of Cybersecurity, in Line With the Cybersecurity Act

Before going any further, we feel it is important to clarify the notion of “cybersecurity” as defined by the CRA.

Article 3 of the Cyber Resilience Act specifies that the term “cybersecurity” is understood in the same sense as in Article 2 of Regulation (EU) 2019/881, better known as the Cybersecurity Act (CSA), i.e.:

“‘Cybersecurity’ means the activities necessary to protect network and information systems, the users of such systems, and other persons affected by cyber threats.” – Cybersecurity Act, Article 2

The CRA therefore adopts a defensive definition of cybersecurity: it’s about defending products, protecting them from potential threats. So keep in mind that “cybersecurity” will hereafter be synonymous with defensive posture — even if offensive approaches such as penetration testing will play their part, notably to assess product security and the effectiveness of defensive measures.

And What About Digital Resilience?

Some may be surprised by this semantic choice, at a time when the term “digital resilience” is flourishing across European legislation, from the Cyber Resilience Act (CRA) to the Digital Operational Resilience Act (DORA) governing the financial sector.

Indeed, resilience is a concept that goes far beyond the simple protection of assets. It’s not just about defense, but also about resistance and business continuity, even under heavy fire. But it’s important to understand that, unlike NIS2 or DORA, the CRA regulates products, not entities. This distinction is fundamental.

We can expect a bank or a nuclear power plant to be resilient, i.e. capable of ensuring the continuity of its activities and services even in the event of an attack (hence the value of a disaster recovery plan, or DRP, and a business continuity plan, or BCP), so as to spare the European Union disastrous systemic effects. But the same cannot reasonably be expected of a smartwatch or a baby monitor.

On the other hand, we can expect an IoT product to remain operational so that it fulfills its function, and to resist compromise so that it does not endanger its users. From the attacker’s point of view, compromising a smart device is never an end in itself, but always a way of reaching the target: the user, whether a private individual or a critical organization.

By improving the security of products with digital elements, the CRA contributes to strengthening the digital resilience of all users, and thus of the entire European ecosystem. With the Cyber Resilience Act, defensive cybersecurity is at the service of digital resilience.

When Will the CRA Come into Force?

The answer lies in Article 71 of the regulation. The Cyber Resilience Act enters into force on the twentieth day following its publication in the Official Journal of the European Union, but its obligations only apply 3 years (36 months) after its entry into force.

Adoption and publication of the CRA is expected in the course of 2024, resulting in a compliance deadline of 2027 for regulated products. It should be remembered that the CRA is a European regulation, and as such will be applicable as it stands in all member states (unlike a directive, such as NIS2, which must be transposed into the national law of each country).

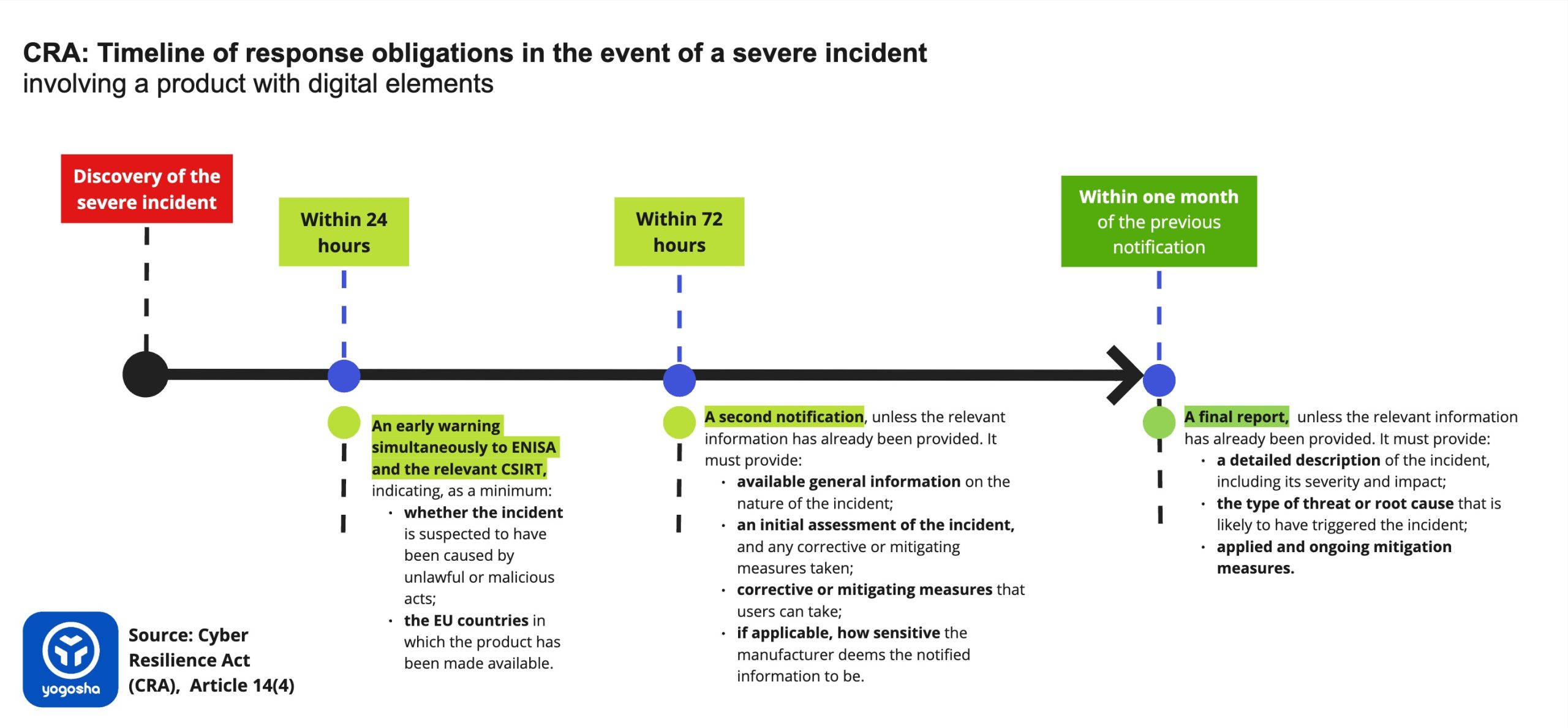

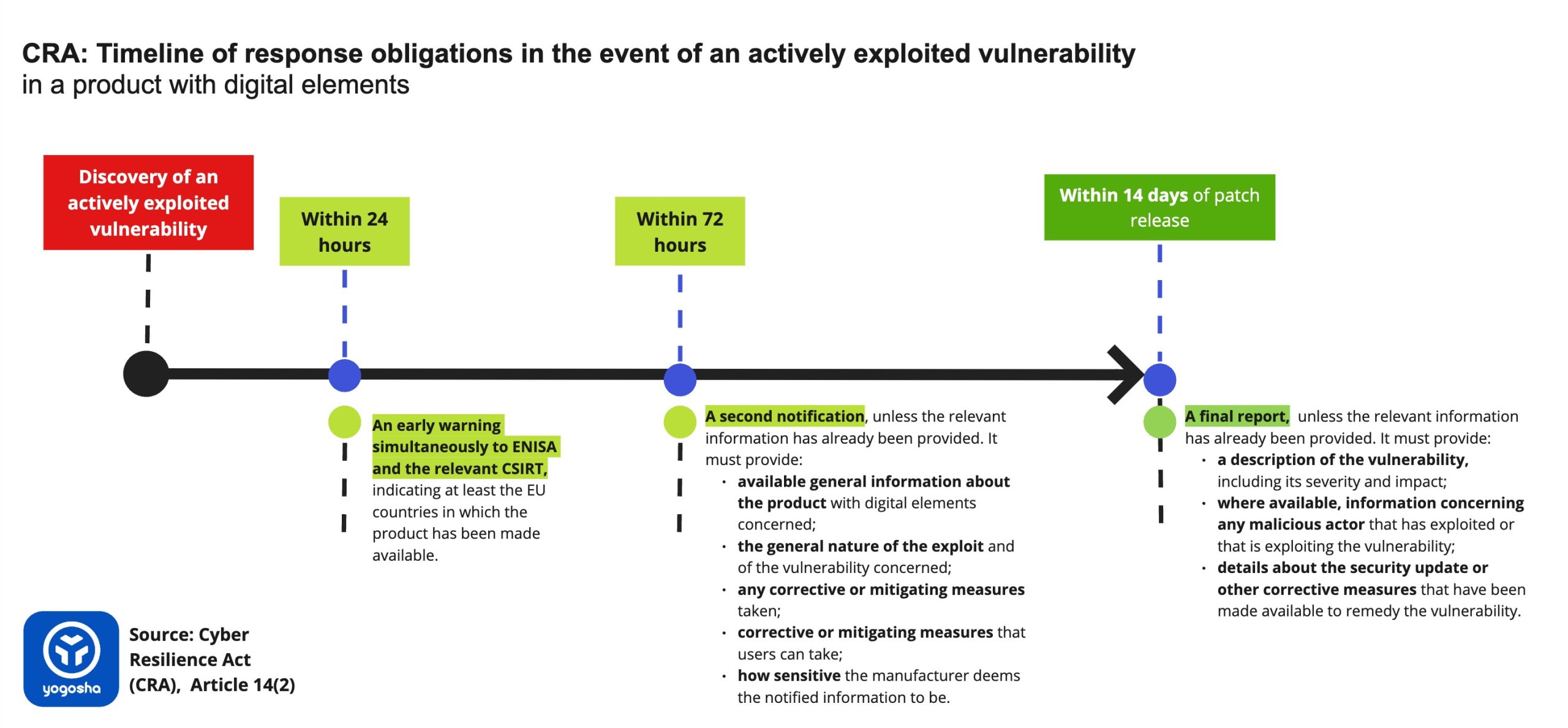

Mandatory Notification of Incidents and Exploited Vulnerabilities as of 2026

There are two exceptions to this three-year deadline, clearly set out in Article 71 and recital 127.

Firstly, the obligations to report severe incidents and actively exploited vulnerabilities (two concepts we’ll come back to later) to ENISA (European Union Agency for Cybersecurity) and national CSIRTs (Computer Security Incident Response Teams) will apply 21 months after the regulation’s entry into force. With adoption expected in 2024, notifications to the authorities will be mandatory from 2026.

The second exception applies to the provisions concerning the notification of conformity assessment bodies, which will apply 18 months after the regulation’s entry into force. We won’t dwell on this point, as it concerns auditors rather than manufacturers.
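These offsets are simple month arithmetic. As a minimal sketch, assuming the offsets are counted from the regulation’s entry into force (as Article 71 does) and using a purely hypothetical start date, the milestones can be computed as follows. The function and label names are ours, and the dates fixed in the published regulation take precedence over this calculation.

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Return the date `months` calendar months after `start`.

    If the target month is shorter (e.g. January 31 + 1 month), the day is
    clamped to the last day of that month.
    """
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


# Offsets from Article 71 as summarised above, in months after entry into force.
CRA_OFFSETS_MONTHS = {
    "notification of conformity assessment bodies": 18,
    "reporting of actively exploited vulnerabilities and severe incidents": 21,
    "all other obligations": 36,
}


def cra_milestones(entry_into_force: date) -> dict[str, date]:
    """Map each milestone label to the date computed from the offsets above."""
    return {
        label: add_months(entry_into_force, months)
        for label, months in CRA_OFFSETS_MONTHS.items()
    }


if __name__ == "__main__":
    # Hypothetical entry-into-force date, for illustration only.
    for label, when in cra_milestones(date(2024, 6, 1)).items():
        print(when.isoformat(), label)
```

With the hypothetical date of June 1, 2024, the script prints 2025-12-01, 2026-03-01 and 2027-06-01 for the three milestones. Clamping the day to the end of a shorter month is our own design choice for dates such as January 31; the regulation does not describe any such rule.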

Penalties and Fines Applicable Under the Cyber Resilience Act

The Cyber Resilience Act is meant to enforce compliance, so it comes with penalties for offenders. And as always, the best deterrent is to hit where it hurts: the wallet. Article 64 of the CRA provides for a number of administrative fines, in addition to any other corrective or restrictive measures decided by the market surveillance authorities, which can also order the withdrawal of a product from the market if this is necessary for security reasons.

Up to €15M or 2.5% of Worldwide Sales

The amount of the fine depends mainly on two factors: the organization at fault, and the nature of the non-compliance. A start-up will not be penalized in the same way as a multinational, and a product security-related failure will be more heavily sanctioned than an administrative one. It’s all quite logical.

All the compliance elements below may seem rather vague if you’re not (yet) familiar with the regulation, but rest assured, we’ll be explaining them throughout this guide.

For failure to comply with the essential requirements of the regulation, with incident and vulnerability notification obligations, or with any other obligations incumbent upon them, manufacturers are liable to a fine of up to 15 million euros or 2.5% of their total worldwide annual turnover for the previous financial year, whichever is higher.

Authorized representatives, importers, distributors, assessment bodies and their subcontractors who breach their obligations are liable to fines of up to €10 million or 2% of total worldwide annual turnover, whichever is higher.

This second scale also applies to all players when non-compliance concerns obligations relating to the EU declaration of conformity, technical documentation, CE marking rules, conformity assessment or access to data and documentation.

Finally, the provision of inaccurate, incomplete or misleading information to notified bodies or market surveillance authorities may be subject to a fine of up to €5 million or 1% of total worldwide annual turnover, whichever is higher.
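Each of these scales follows the same “whichever is higher” rule, which boils down to taking the maximum of a fixed cap and a share of turnover. The sketch below computes that ceiling for the three scales above. The tier names are hypothetical labels of our own, and the result is only the statutory maximum, not the amount an authority would actually impose in a given case. Decimal arithmetic is used to avoid floating-point rounding on monetary amounts.

```python
from decimal import Decimal

# Hypothetical tier names for the three scales described above:
# (fixed cap in euros, share of total worldwide annual turnover).
FINE_TIERS = {
    # Manufacturers: essential requirements, notification and other manufacturer obligations.
    "manufacturer_obligations": (Decimal("15000000"), Decimal("0.025")),
    # Other operators' obligations, plus declaration of conformity, technical
    # documentation, CE marking, conformity assessment and data access.
    "other_obligations": (Decimal("10000000"), Decimal("0.02")),
    # Inaccurate, incomplete or misleading information given to bodies or authorities.
    "misleading_information": (Decimal("5000000"), Decimal("0.01")),
}


def max_fine_eur(tier: str, worldwide_turnover_eur: int) -> Decimal:
    """Return the statutory ceiling: the higher of the fixed cap and the turnover share."""
    fixed_cap, turnover_share = FINE_TIERS[tier]
    return max(fixed_cap, turnover_share * Decimal(worldwide_turnover_eur))


if __name__ == "__main__":
    # Hypothetical turnovers, for illustration only.
    print(max_fine_eur("manufacturer_obligations", 100_000_000))    # fixed cap applies
    print(max_fine_eur("manufacturer_obligations", 2_000_000_000))  # turnover share applies
```

For a manufacturer with a hypothetical turnover of €100 million, 2.5% is €2.5 million, so the €15 million cap is the ceiling; at €2 billion, 2.5% yields €50 million, which becomes the ceiling instead. The exemptions for micro and small enterprises and open-source software stewards discussed below are not modelled.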

The Exceptions: Open-Source Software, Microenterprises and SMEs

Article 64.10 of the Cyber Resilience Act notes two exceptions to these sanction regimes.

Firstly, manufacturers considered to be micro or small enterprises cannot be financially sanctioned for failure to meet notification deadlines for severe incidents and actively exploited vulnerabilities (see Chapter 10).

Secondly, open-source software stewards cannot be subject to financial penalties, regardless of the violation of the Cyber Resilience Act.

Having said all this, we can now move on to the heart of this guide, namely the different steps to be taken to achieve compliance.

1. Check if Your Products Are Regulated by the Cyber Resilience Act

As a manufacturer, the first thing to do is to find out which products are regulated by the Cyber Resilience Act. In this respect, Article 2 couldn’t be clearer:

“This Regulation applies to products with digital elements made available on the market, the intended purpose or reasonably foreseeable use of which includes a direct or indirect logical or physical data connection to a device or network.” – CRA, Article 2.1

Yes, the CRA concerns a lot of products; that’s exactly why it’s a major piece of cybersecurity legislation. And if you still have any hope of escaping it, Article 3 defines “products with digital elements” — which will be shortened to “products” in the rest of this guide.

“‘Product with digital elements’ means a software or hardware product and its remote data processing solutions, including software or hardware components being placed on the market separately.” – CRA, Article 3

This definition is important, as it confirms that the software solutions that go hand in hand with a regulated product also fall within the scope of the CRA. SaaS platforms and mobile apps accompanying IoT products, such as those associated with smartwatches or connected scales, are therefore subject to the same obligations. Conversely, a SaaS platform that isn’t linked to any product is not covered by the CRA (although it may be regulated by the NIS2 directive).

The List of Exceptions

Article 2 lists some rare exceptions, which should be borne in mind. The following are excluded from the scope of the CRA:

- Medical devices and in vitro diagnostic medical devices covered by regulations (EU) 2017/745 and (EU) 2017/746;

- Motor vehicles and their trailers, and their systems, components and separate technical units, covered by regulation (EU) 2019/2144;

- Civil aviation systems and marine equipment, governed respectively by Regulation (EU) 2018/1139 and Directive 2014/90/EU;

- Products with digital elements developed or modified exclusively for national security or defense purposes.
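For manufacturers with a large product portfolio, the scope criterion of Article 2.1 and the exclusions above can serve as a first-pass triage. The sketch below is a simplification under stated assumptions: the field and label names are hypothetical, each criterion is reduced to a yes/no answer given by a human reviewer, and the exclusions are coarser than the legal text. A True result only flags the product for closer review; it does not settle its legal status.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical labels for the sector-specific regimes excluded by Article 2.
EXCLUDED_REGIMES = frozenset({
    "medical_devices",               # Regulations (EU) 2017/745 and (EU) 2017/746
    "motor_vehicles",                # Regulation (EU) 2019/2144
    "civil_aviation",                # Regulation (EU) 2018/1139
    "marine_equipment",              # Directive 2014/90/EU
    "national_security_or_defense",  # developed or modified exclusively for these purposes
})


@dataclass
class Product:
    name: str
    made_available_on_eu_market: bool
    has_digital_elements: bool          # software or hardware product (incl. remote data processing)
    data_connection: bool               # direct or indirect, logical or physical, intended or foreseeable
    other_regime: Optional[str] = None  # one of EXCLUDED_REGIMES, if any


def needs_cra_review(product: Product) -> bool:
    """First-pass triage: True if the product looks in scope and deserves legal review."""
    if not (product.made_available_on_eu_market
            and product.has_digital_elements
            and product.data_connection):
        return False
    return product.other_regime not in EXCLUDED_REGIMES


if __name__ == "__main__":
    # Hypothetical products, for illustration only.
    print(needs_cra_review(Product("smartwatch", True, True, True)))
    print(needs_cra_review(Product("insulin pump", True, True, True, "medical_devices")))
```

The function deliberately says nothing about the product’s category (default, important or critical), which requires checking the annexes discussed next.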

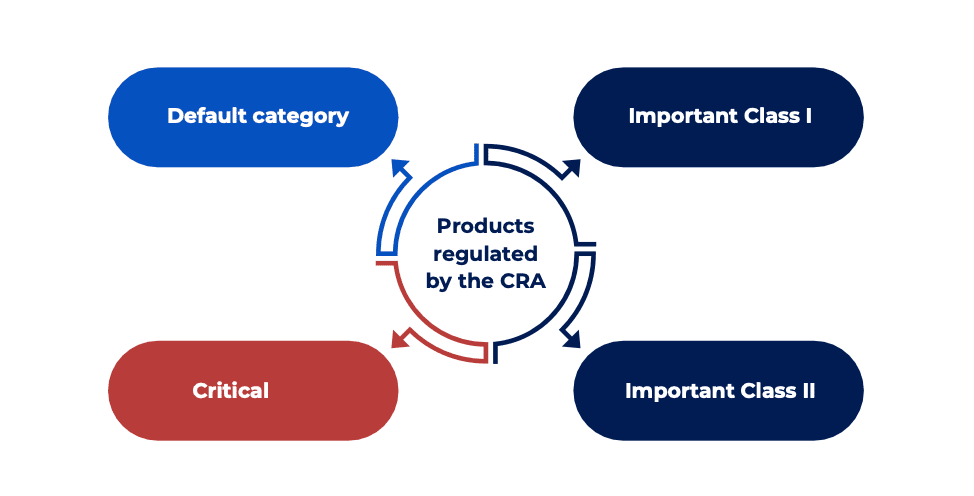

The Four Product Categories Regulated by the CRA

The Cyber Resilience Act differentiates four categories among the products it regulates:

- The default category, which is not mentioned as such, but exists de facto. It includes all products that meet the general definitions given above;

- Important products of Class I;

- Important products of Class II;

- Critical products.

It’s very important to understand this classification, as it determines, among other things, the conformity assessment procedure required for each product. The more critical the product, the more rigorous the assessment, which may require the involvement of a third-party auditor.

Important Products

Important products are divided into two classes: Class I and Class II, both of which are detailed in Annex III of the regulation, which we’ll examine below.

According to Article 7 of the CRA, the categories of important products are those whose core functionality meets at least one of the following two criteria:

- The product primarily performs functions critical to the cybersecurity of other products, networks or services, including securing authentication and access, intrusion prevention and detection, endpoint security or network protection;

- The product performs a function which carries a significant risk of adverse effects in terms of its intensity and ability to disrupt, control or cause damage to a large number of other products or to the health, security or safety of its users through direct manipulation, such as a central system function, including network management, configuration control, virtualisation or processing of personal data.

Obviously, it’s not up to manufacturers to decide whether their products are important or not: the CRA sets out an exhaustive, albeit general, list. In addition, Article 7 requires the European Commission to specify the technical descriptions of important and critical product categories within 12 months of the regulation’s entry into force.

It should also be noted that the European Commission can modify Annex III as it sees fit, and thus add, delete or move a product category from one class to another. In such cases, the general rule is to observe a 12-month transition period before the new rules apply.

Finally, the CRA points out that a product which integrates an important product is not automatically important in itself, and is therefore not necessarily subject to the same conformity assessment obligations.

List of Important Products of Class I

Important Class I products are detailed in Annex III of the CRA. The exact list is reproduced below.

Important products falling under Class I are:

- Identity management systems and privileged access management software and hardware, including authentication and access control readers, including biometric readers;

- Standalone and embedded browsers;

- Password managers;

- Software that searches for, removes, or quarantines malicious software;

- Products with digital elements with the function of virtual private network (VPN);

- Network management systems;

- Security information and event management (SIEM) systems;

- Boot managers;

- Public key infrastructure and digital certificate issuance software;

- Physical and virtual network interfaces;

- Operating systems;

- Routers, modems intended for the connection to the internet, and switches;

- Microprocessors with security-related functionalities;

- Microcontrollers with security-related functionalities;

- Application specific integrated circuits (ASIC) and field-programmable gate arrays (FPGA) with security-related functionalities;

- Smart home general purpose virtual assistants;

- Smart home products with security functionalities, including smart door locks, security cameras, baby monitoring systems and alarm systems;

- Internet connected toys covered by Directive 2009/48/EC that have social interactive features (e.g. speaking or filming) or that have location tracking features;

- Personal wearable products to be worn or placed on a human body that have a health monitoring (such as tracking) purpose or personal wearable products that are intended for the use by and for children. (Editor’s note: excluding medical devices covered by regulations (EU) 2017/745 and (EU) 2017/746).

List of Important Products of Class II

Important products falling under Class II are:

- Hypervisors and container runtime systems that support virtualized execution of operating systems and similar environments;

- Firewalls, intrusion detection and/or prevention systems;

- Tamper-resistant microprocessors;

- Tamper-resistant microcontrollers.

Critical Products

The critical product classification is the highest of all those established by the CRA. Unsurprisingly, products belonging to the designated categories are those subject to the strictest conformity assessment obligations.

To find out what makes a product critical, we must refer to Article 8 of the CRA. It states that, to designate a product category as critical, the following two assessments are taken into account in addition to the criteria relating to important products:

- The extent to which there is a critical dependency of essential entities referred to in Article 3 of Directive (EU) 2022/2555 — aka NIS2 — on the category of products with digital elements;

- The extent to which incidents and exploited vulnerabilities concerning the category of products with digital elements can lead to serious disruptions to critical supply chains across the internal market.

Behind these somewhat cryptic formulations lie two obsessions of the NIS2 Directive:

- To protect essential entities, those whose failure could have systemic effects within the EU, such as players in the transport, energy or health sectors. If you’d like to find out more about Essential Entities (EE), please refer to the relevant section of our NIS2 compliance guide;

- To secure the supply chain, those service providers who, when digitally fragile, represent a major attack vector against the entities that work with them. If you’re not familiar with the subject, we suggest you take a look at the cyber attack on SolarWinds, which has become a textbook case of supply chain attacks.

Understandably, the products deemed critical by the CRA are those that pose a risk to entities vital to the proper functioning of the Union, or that are likely to jeopardize the most important supply chains.

List of Critical Products

It should be noted here that the list below is not set in stone, since, as with important products, the European Commission is entitled to amend it as it sees fit by means of delegated acts. In this case, the general rule is to observe a 6-month transition period before applying the new rules.

Critical products are listed in Annex IV of the CRA:

- Smartcards or similar devices, including secure elements;

- Hardware Devices with Security Boxes (Editor’s note: products such as secure smart card readers, tachographs, Hardware Security Modules (HSM), etc.);

- Smart meter gateways within smart metering systems as defined in Article 2.23 of Directive (EU) 2019/944 and other devices for advanced security purposes, including for secure cryptoprocessing.

The last point being rather obscure, a few clarifications are in order. Directive (EU) 2019/944, referred to here, is a piece of European legislation of June 5, 2019 on common rules for the internal market for electricity. It defines a “smart metering system” as follows:

“‘Smart metering system’ means an electronic system that is capable of measuring electricity fed into the grid or electricity consumed from the grid, providing more information than a conventional meter, and that is capable of transmitting and receiving data for information, monitoring and control purposes, using a form of electronic communication.” – Directive (EU) 2019/944, Article 2.23

2. Learn About the 21 Essential Requirements for Cybersecurity

We’re tackling a big chunk of the regulation here; if you want to pour yourself a cup of coffee, now’s the time.

The Cyber Resilience Act lays down essential requirements (a euphemism for obligations) for all regulated products and their manufacturers. They are detailed in Annex I of the regulation, which will be quoted regularly hereafter.

The essential requirements of the CRA fall into two groups:

- Product cybersecurity requirements. These cover the level of security and the intrinsic characteristics of the products;

- Vulnerability handling requirements. These cover the measures and processes implemented by manufacturers.

Please bear in mind that these requirements are absolutely crucial. They are at the heart of the Cyber Resilience Act, and their implementation will determine whether a product is considered compliant or not. In fact, Article 6 of the CRA states that only products that meet ALL these requirements can be placed on the European market.

Therefore, implementing these requirements should be your roadmap to Cyber Resilience Act compliance, your light in the night when you feel lost in the face of all there is to accomplish.

These requirements also underpin the document you’re currently reading. In this chapter, we’ll list them as they are set out in the regulation, then come back to each of them in greater detail as the guide progresses. Remember that the CRA is a legal text, not a technical specification manual, so some requirements may appear somewhat vague or generic on first reading.

The 13 CRA Requirements for Product Cybersecurity

The first part of the CRA’s essential requirements is devoted to product properties, which must guarantee a solid degree of intrinsic security. The text is crystal clear:

“Products with digital elements shall be designed, developed and produced in such a way that they ensure an appropriate level of cybersecurity based on the risks.” – CRA, Annex I

This means that products need to be secure with regard to the risks associated with them. These risks are to be determined by a cybersecurity risk assessment (see Chapter 5), carried out by the manufacturer at the design stage.

Products must meet the following 13 essential cybersecurity requirements (Source: Annex I, Part 1):

- Be made available on the market without known exploitable vulnerabilities;

- Be made available on the market with a secure by default configuration, unless otherwise agreed between manufacturer and business user in relation to a tailor-made product, including the possibility to reset the product to its original state;

- Ensure that vulnerabilities can be addressed through security updates, including, where applicable, through automatic security updates that are installed within an appropriate timeframe enabled as a default setting, with a clear and easy-to-use opt-out mechanism, through the notification of available updates to users, and the option to temporarily postpone them;

- Ensure protection from unauthorized access by appropriate control mechanisms, including but not limited to authentication, identity or access management systems, as well as report on possible unauthorized access;

- Protect the confidentiality of stored, transmitted or otherwise processed data, personal or other, such as by encrypting relevant data at rest or in transit by state of the art mechanisms, and by using other technical means;

- Protect the integrity of stored, transmitted or otherwise processed data, personal or other, commands, programs and configuration against any manipulation or modification not authorized by the user, as well as report on corruptions;

- Process only data, personal or other, that are adequate, relevant and limited to what is necessary in relation to the intended purpose of the product (‘minimisation of data’);

- Protect the availability of essential and basic functions, also after an incident, including with resilience and mitigation measures against denial-of-service attacks;

- Minimize the negative impact by themselves or connected devices on the availability of services provided by other devices or networks;

- Be designed, developed and produced to limit attack surfaces, including external interfaces;

- Be designed, developed and produced to reduce the impact of an incident using appropriate exploitation mitigation mechanisms and techniques;

- Provide security related information by recording and/or monitoring relevant internal activity, including the access to or modification of data, services or functions, with an opt-out mechanism for the user;

- Provide the possibility for users to securely and easily remove on a permanent basis all data and settings and, where such data can be transferred to other products or systems, ensure this is done in a secure manner.
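Several of these requirements (security updates, integrity of programs, protection against unauthorized modification) meet in one concrete building block: a product should refuse to install an update it cannot verify. The sketch below shows only the simplest part of that check, comparing the received image with a SHA-256 digest taken from an update manifest. It assumes that the manifest itself has already been authenticated, typically with a digital signature, which is not shown. The function names are hypothetical, and this check alone does not make a product compliant.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of `data`."""
    return hashlib.sha256(data).hexdigest()


def verify_update(image: bytes, expected_sha256_hex: str) -> bool:
    """Accept the update image only if it matches the expected digest.

    ASSUMPTION: `expected_sha256_hex` comes from an update manifest whose
    authenticity was already established (e.g. by a digital signature);
    that step is outside this sketch.
    """
    actual = sha256_hex(image)
    # compare_digest avoids an early-exit comparison; harmless here and a good habit.
    return hmac.compare_digest(actual, expected_sha256_hex.strip().lower())


if __name__ == "__main__":
    firmware = b"hypothetical firmware image v1.2.3"
    manifest_digest = sha256_hex(firmware)  # stands in for the value read from the manifest
    print(verify_update(firmware, manifest_digest))            # genuine image
    print(verify_update(firmware + b"\x00", manifest_digest))  # modified image
```

In the example, the genuine image is accepted and the image with one extra byte is rejected. Real update systems usually verify a signature over the manifest or the image itself, so that an attacker who can replace the file cannot also replace the expected digest.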

The 8 CRA Requirements for Vulnerability Handling

The second part of the CRA’s essential requirements covers manufacturers’ obligations regarding vulnerability management. These are just as important as the requirements applicable to products, and their implementation is also a prerequisite for placing a product on the market.

Manufacturers of a product regulated by the CRA must meet the following 8 vulnerability management requirements (Source: Annex I, Part 2):

- Identify and document vulnerabilities and components contained in the product, including by drawing up a software bill of materials in a commonly used and machine-readable format covering at the very least the top-level dependencies of the product;

- In relation to the risks posed to the products with digital elements, address and remediate vulnerabilities without delay, including by providing security updates. Where technically feasible, new security updates shall be provided separately from functionality updates;

- Apply effective and regular tests and reviews of the security of the product with digital elements;

- Once a security update has been made available, share and publicly disclose information about fixed vulnerabilities, including a description of the vulnerabilities, information allowing users to identify the product affected, the impacts of the vulnerabilities, their severity and clear and accessible information helping users to remediate the vulnerabilities. In duly justified cases, where manufacturers consider the security risks of publication to outweigh the security benefits, they may delay making public information regarding a fixed vulnerability until after users have been given the possibility to apply the relevant patch;

- Put in place and enforce a policy on coordinated vulnerability disclosure (CVD);

- Take measures to facilitate the sharing of information about potential vulnerabilities in their product as well as in third party components contained in that product, including by providing a contact address for the reporting of the vulnerabilities discovered in the product;

- Provide for mechanisms to securely distribute updates for products to ensure that vulnerabilities are fixed or mitigated in a timely manner, and, where applicable for security updates, in an automatic manner;

- Ensure that available security updates are disseminated without delay and, unless otherwise agreed between manufacturer and business user in relation to a tailor-made product, free of charge, accompanied by advisory messages providing users with the relevant information, including on potential action to be taken.
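The first requirement above calls for a software bill of materials (SBOM) in a “commonly used and machine-readable format”, without naming one in the text quoted here. CycloneDX and SPDX are two widely used formats. As a minimal sketch, assuming CycloneDX 1.5 in JSON and hypothetical product and dependency names, an SBOM covering top-level dependencies can be as small as this:

```python
import json


def minimal_cyclonedx_sbom(product_name: str, product_version: str,
                           dependencies: list[tuple[str, str, str]]) -> str:
    """Return a minimal CycloneDX 1.5 JSON SBOM listing top-level dependencies.

    `dependencies` holds (name, version, purl) tuples, where purl is a
    Package URL identifying the component.
    """
    bom = {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "version": 1,
        "metadata": {
            "component": {
                "type": "application",
                "name": product_name,
                "version": product_version,
            }
        },
        "components": [
            {"type": "library", "name": name, "version": version, "purl": purl}
            for name, version, purl in dependencies
        ],
    }
    return json.dumps(bom, indent=2)


if __name__ == "__main__":
    # Hypothetical product and dependencies, for illustration only.
    print(minimal_cyclonedx_sbom(
        "example-smart-plug-firmware", "2.4.0",
        [("openssl", "3.0.13", "pkg:generic/openssl@3.0.13"),
         ("zlib", "1.3.1", "pkg:generic/zlib@1.3.1")],
    ))
```

In practice, SBOMs are usually generated by build or scanning tools rather than written by hand, and they often go beyond the top-level dependencies that the CRA sets as a minimum.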

3. Be Aware of Special Cases: Importers, Distributors and Open Source Software

The above 21 requirements apply to manufacturers of products regulated by the Cyber Resilience Act. But the regulation also addresses blind spots, specifying the obligations applicable to importers, distributors and open-source software stewards. If these particular cases do not concern you, you can skip ahead to the next chapter.

Importers’ Obligations

The regulation devotes its entire Article 19 to the obligations of importers. Obviously, they are absolutely forbidden to place on the market products that do not comply with the 13 essential cybersecurity requirements, or whose manufacturers do not apply the 8 essential vulnerability management requirements. The application of all these requirements remains the responsibility of manufacturers.

Before placing products on the market, the importer must ensure that:

- The conformity assessment procedures have been carried out by the manufacturer;

- The manufacturer has drawn up the technical documentation;

- The product bears the CE marking, and is accompanied by the EU declaration of conformity and the information and instructions to the user;

- The product bears a type, batch or serial number or any other element enabling it to be identified. Where this isn’t possible, this information must be provided on the packaging or in a document accompanying the product;

- Both the manufacturer AND the importer have indicated their name, company name or registered trademark, as well as the postal, email or website address at which they can be contacted respectively. This information must appear on the product, its packaging or an accompanying document;

- The manufacturer has ensured that the end date of the support period, in particular the month and year, is specified at the time of purchase, in a clear, comprehensible and easily accessible manner and, as the case may be, on the product, its packaging or by digital means.
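Importers handling many product references may find it useful to track these checks systematically. The sketch below turns the list above into a simple record and reports what is missing before a product is placed on the market. Everything here is hypothetical: the field names are ours, each check is a boolean recorded by the importer after reviewing the documents, and an empty result only means that every item was recorded as verified.

```python
from dataclasses import dataclass, fields
from datetime import date
from typing import Optional


@dataclass
class ImporterChecks:
    """Hypothetical record of what an importer verified for one product."""
    conformity_assessment_carried_out: bool
    technical_documentation_drawn_up: bool
    ce_marking_present: bool
    eu_declaration_of_conformity_included: bool
    user_information_and_instructions_included: bool
    type_batch_or_serial_number_present: bool  # or on packaging / accompanying document
    manufacturer_contact_details_indicated: bool
    importer_contact_details_indicated: bool
    support_period_end: Optional[date] = None  # month and year shown at time of purchase


def missing_checks(record: ImporterChecks) -> list[str]:
    """Return the names of the checks that are not (yet) satisfied."""
    missing = [
        f.name for f in fields(record)
        if f.name != "support_period_end" and not getattr(record, f.name)
    ]
    if record.support_period_end is None:
        missing.append("support_period_end")
    return missing


if __name__ == "__main__":
    # Hypothetical product record with two gaps.
    record = ImporterChecks(True, True, False, True, True, True, True, True)
    print(missing_checks(record))  # ['ce_marking_present', 'support_period_end']
```

Keeping the support period end date as a separate, optional field makes its absence visible, since its month and year must be communicated to the buyer at the time of purchase.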

Importers must be able to provide all the necessary documents proving compliance with the above requirements. In addition, if an importer identifies a vulnerability in a product, it must inform the manufacturer without undue delay and, where the product presents a significant cybersecurity risk, the market surveillance authorities as well.

Distributors’ Obligations

For the obligations incumbent on distributors, we must turn to Article 20. As with importers, the main task is to ensure that paperwork is in order. Distributors must therefore “act with due diligence”, checking that the product bears the CE mark and that the manufacturer and importer have complied with all the points in the above list.

White-Label Products

A noteworthy exception: an importer or distributor is considered a manufacturer when it places a product on the market “under its name or trademark”, or carries out “a substantial modification” of a product already available (Article 21). All the obligations incumbent on manufacturers then apply.

The same goes for any natural or legal person other than the manufacturer, importer or distributor who makes a substantial modification to a product and makes it available on the European market (Article 22).

Open Source Software

Open source software was a major issue throughout the whole process of drafting the Cyber Resilience Act, prompting numerous outcries from open source players.

The first versions of the regulation directly threatened the open source model by making its creators liable. The community feared a “chilling effect” on software development as a whole, free or not, since proprietary software often depends on open source components. Free software and proprietary software are not two separate worlds: they coexist to form the software ecosystem we know today, in a kind of symbiosis that benefits everyone.

It was such a cause for concern that in April 2023, the Eclipse Foundation — whose members include big players such as Google, IBM, Oracle and Microsoft — published an open letter to the European Commission, co-signed by numerous organizations such as the Linux Foundation Europe and the French CNLL.

Fortunately, the European Commission has consulted with open source representatives, and the final version seems to satisfy everyone.

Which Open Source Software Is Regulated by the CRA?

First of all, let’s explain what open source software is according to the CRA:

“‘Free and open-source software’ means software the source code of which is openly shared and which is made available under a free and open-source license which provides for all rights to make it freely accessible, usable, modifiable and redistributable.” – CRA, Article 3.48

Let’s then look at Recital 18, which states that “only free and open-source software made available on the market, and therefore supplied for distribution or use in the course of a commercial activity, should fall within the scope of this Regulation.” This clearly indicates that free software that isn’t part of a commercial activity is not affected by the CRA.

But what constitutes a commercial activity according to the regulation? Here, Recital 15 provides a most welcome clarification.

Supply in the course of a commercial activity might be characterized by:

- Charging a price for a product;

- Charging a price for technical support services where this does not serve only the recuperation of actual costs;

- An intention to monetise, for instance by providing a software platform through which the manufacturer monetises other services;

- Requiring as a condition for use the processing of personal data for reasons other than exclusively for improving the security, compatibility or interoperability of the software;

- Accepting donations exceeding the costs associated with the design, development and provision of the product.

If the software meets one of these criteria, it is likely to fall within the scope of the Cyber Resilience Act. On the other hand, the following elements alone are not sufficient to characterize a commercial activity:

- “Accepting donations without the intention of making a profit.” (Recital 15);

- Supply “as part of the delivery of a service for which a fee is charged solely to recover the actual costs directly related to the operation of that service, such as may be the case with certain products by public administration entities.” (Recital 16);

- “The provision of products with digital elements qualifying as free and open-source software that are not monetised by their manufacturers.” (Recital 18);

- “The mere fact that an open-source software product receives financial support from manufacturers or that manufacturers contribute to the development of such a product.” (Recital 18);

- “The mere presence of regular releases” (Recital 18);

- Development by not-for-profit organizations, “provided that the organization is set up in such a way that ensures that all earnings after costs are used to achieve not-for-profit objectives.” (Recital 18).
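These criteria can serve as a first-pass screening aid for an open source project. The Python sketch below is a simplification based on our own assumptions. The indicator names are hypothetical shorthand for the Recital 15 items. Any single indicator is flagged as "likely in scope", and the result is only meant to trigger a legal review, not to replace one.

```python
from enum import Enum, auto


class Indicator(Enum):
    """Indicators of a commercial activity drawn from Recital 15 of the CRA.

    The member names are our own shorthand, not wording from the regulation.
    """
    PRICE_CHARGED = auto()
    SUPPORT_PRICED_ABOVE_COSTS = auto()
    INTENTION_TO_MONETISE = auto()
    PERSONAL_DATA_REQUIRED_FOR_OTHER_PURPOSES = auto()
    DONATIONS_ABOVE_COSTS = auto()


def screen_project(indicators: set[Indicator]) -> str:
    """First-pass screening only: a positive result means 'refer to legal review'."""
    if indicators:
        names = ", ".join(sorted(i.name for i in indicators))
        return f"likely in scope (commercial indicators: {names})"
    # Non-profit donations, cost-recovery fees, regular releases or funding
    # from manufacturers are deliberately not modelled as indicators, since
    # they are not sufficient on their own to characterise a commercial activity.
    return "no commercial indicator found"


print(screen_project({Indicator.PRICE_CHARGED}))
# likely in scope (commercial indicators: PRICE_CHARGED)
print(screen_project(set()))  # no commercial indicator found
```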

Finally, the CRA states that the following are not considered as making a product available on the market:

- The supply of an open source product intended for integration by other manufacturers into their own products UNLESS “the component is monetized by its original manufacturer” (Recital 18). In other words, an open source developer does not fall under the CRA simply because another manufacturer integrates their component into a commercial product.

- “The sole act of hosting products on open repositories, including through package managers or on collaboration platforms.” (Recital 20) So it’s possible to aggregate and make available other open-source projects without being considered a distributor — unless there’s a commercial intent, of course.

The Status of Open-Source Software Steward

Now, what about organizations that develop and maintain free software for commercial purposes? The CRA decided to create a separate status for them, that of open-source software steward, defined as follows:

“‘Open-source software steward’ means a legal person, other than a manufacturer, that has the purpose or objective of systematically providing support on a sustained basis for the development of specific products with digital elements, qualifying as free and open-source software and intended for commercial activities, and that ensures the viability of those products.” – CRA, Article 3.14

This status clearly targets the major foundations and structures of the open source community, such as the Linux, Mozilla and Eclipse foundations. Contributors, on the other hand, are spared:

“This Regulation does not apply to natural or legal persons who contribute with source code to products with digital elements qualifying as free and open-source software that are not under their responsibility.” – CRA, Recital 18

As for stewards, let’s not forget that they cannot be fined for breaching the Cyber Resilience Act.

Obligations of Open Source Software Stewards

After this long but necessary digression on the particular case of open source software, it’s time to look at the specific obligations incumbent on stewards, detailed in Article 24.

Open source software stewards must implement and verifiably document a cybersecurity policy. This policy must foster the development of secure products and effective vulnerability handling by their developers. It shall “in particular, include aspects related to documenting, addressing and remediating vulnerabilities and promote the sharing of information concerning discovered vulnerabilities within the open-source community.”

This cybersecurity policy must be made available to any market surveillance authority that requests it, in order to address potential security risks. It goes without saying that the steward’s cooperation is required throughout the process.

Furthermore, Article 24.3 states that stewards are subject to the same reporting requirements as manufacturers, namely:

- For actively exploited vulnerabilities, to the extent that they are involved in the development of the products concerned;

- For severe incidents affecting networks and information systems used in the development of the affected products.

We won’t go into further detail here, as all these requirements for notifying exploited vulnerabilities and severe incidents are covered in Chapter 10 of this guide.

Finally, Article 25 of the Cyber Resilience Act paves the way for “voluntary security attestation programs” to assess the security of open source software. It seems likely that these programs will be developed jointly by the regulator and the main open source foundations.

Part of the community has already indicated that they are working to develop common cybersecurity processes for compliance with the CRA. Among the signatories are the Apache, Blender, OpenSSL, PHP, Python, Rust and Eclipse foundations – quite an impressive list.

4. Prepare Technical Documentation for Each Product Placed on the Market

Let’s get back to business: the obligations incumbent on manufacturers of CRA-regulated products. Technical documentation must accompany each product, and it is a sine qua non for placing that product on the European market. This comes straight from the official text:

“Before placing a product with digital elements on the market, manufacturers shall draw up the technical documentation referred to in Article 31.” – CRA, Article 13.12

Article 31 states that documentation must contain all information demonstrating that the product and the processes implemented by the manufacturer comply with the essential requirements of the CRA. Yes, all of it. More precisely, it must include at least all the elements listed in Annex VII (see below).

An Excellent Roadmap for Compliance

The preparation of the technical documentation is one of the last steps in the compliance process, precisely because it must contain the information needed to prove that the previous steps have been carried out properly. Nevertheless, we thought it would be a good idea to introduce it early on in this guide.

Indeed, since it lists virtually everything that needs to be done, the technical documentation provides an excellent roadmap for compliance with the CRA. We therefore recommend that you pin it above your desk, alongside the 21 essential requirements for product security and vulnerability management.

What to Include in the Technical Documentation

If you’re fond of lengthy paperwork, you’re in for a treat. The technical documentation must include at least all the elements listed in Annex VII of the CRA, namely:

- A general description of the product with digital elements, including:

  - its intended purpose;

  - versions of software affecting compliance with essential requirements;

  - where the product is a hardware product, photographs or illustrations showing external features, marking and internal layout;

  - user information and instructions as set out in Annex II;

- A description of the design, development and production of the product and vulnerability handling processes, including:

  - necessary information on the design and development of the product, where applicable, drawings and schemes and a description of the system architecture explaining how software components build on or feed into each other and integrate into the overall processing;

  - necessary information and specifications of the vulnerability handling processes put in place by the manufacturer, including the software bill of materials, the coordinated vulnerability disclosure policy, evidence of the provision of a contact address for the reporting of the vulnerabilities and a description of the technical solutions chosen for the secure distribution of updates;

  - necessary information and specifications of the production and monitoring processes of the product and the validation of those processes;

- An assessment of the cybersecurity risks against which the product is designed, developed and produced, including how the essential requirements are applicable;

- Relevant information that was taken into account to determine the support period (see Chapter 13);

- A list of the harmonized standards, common specifications or European certification schemes applied;

  - if they haven’t been applied: descriptions of the solutions adopted to meet the essential requirements, including a list of other relevant technical specifications applied;

  - in the event of partial application, the technical documentation shall specify the parts which have been applied;

- Reports of the tests carried out to verify the conformity of the product and of the vulnerability handling processes with the CRA’s essential requirements;

- A copy of the EU declaration of conformity;

- Where applicable, the software bill of materials, further to a request from a market surveillance authority.
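Because Annex VII reads like a checklist, you can check a documentation dossier for completeness automatically before release. The Python sketch below assumes a hypothetical dossier stored as a mapping from section identifiers to text. The identifiers are our own labels for the items above. Conditional items (hardware photographs, SBOM on request) are deliberately left out.

```python
# Hypothetical section identifiers for the Annex VII items listed above.
# Conditional items (hardware photographs, SBOM on request) are left out.
ANNEX_VII_SECTIONS = {
    "general_description",
    "design_development_and_vulnerability_handling",
    "cybersecurity_risk_assessment",
    "support_period_rationale",
    "standards_and_specifications_applied",
    "conformity_test_reports",
    "eu_declaration_of_conformity",
}


def missing_sections(dossier: dict[str, str]) -> list[str]:
    """Return, in alphabetical order, the sections that are absent or blank."""
    return sorted(
        section for section in ANNEX_VII_SECTIONS
        if not dossier.get(section, "").strip()
    )


draft = {section: "drafted" for section in ANNEX_VII_SECTIONS}
draft["cybersecurity_risk_assessment"] = "   "  # left blank by mistake
print(missing_sections(draft))  # ['cybersecurity_risk_assessment']
```

Such a check only confirms that each section exists. It says nothing about whether the content actually demonstrates conformity.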

This technical documentation must be kept available to market surveillance authorities for at least 10 years after the product has been placed on the market, or for the support period, whichever is longer.
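Computing the retention deadline is simple date arithmetic. The Python sketch below assumes, as we read Article 13, that the 10 years are counted from the date the product is placed on the market. The dates in the example are hypothetical.

```python
from datetime import date


def add_years(d: date, years: int) -> date:
    """Add calendar years, mapping 29 February to 28 February when needed."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:  # 29 February in a non-leap target year
        return d.replace(year=d.year + years, day=28)


def retention_end(placed_on_market: date, support_period_end: date) -> date:
    """Keep the documentation until the later of (placing on market + 10 years)
    and the end of the support period."""
    return max(add_years(placed_on_market, 10), support_period_end)


# Hypothetical product placed on the market in June 2027 and supported until May 2039.
print(retention_end(date(2027, 6, 1), date(2039, 5, 31)))  # 2039-05-31
```

Here the support period outlasts the 10-year minimum, so it sets the deadline. With a shorter support period, the result would be 1 June 2037.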

Simplified Technical Documentation for Micro-Enterprises and SMEs

It’s worth noting that micro-enterprises and SMEs, including startups, can provide their technical documentation in a simplified form, as per Article 33.5. This article states that the European Commission shall specify the details of the simplified form by means of delegated acts. To be continued.

5. Conduct a Cybersecurity Risk Assessment Upstream of Product Design

As mentioned above, the 13 essential requirements of the regulation relating to products must be applied taking into account the cybersecurity risks specific to the product in question. And there’s only one way to determine these risks: carry out a cybersecurity risk assessment.

Security Throughout the Entire Product Lifecycle

The risk assessment must be carried out by the manufacturer, upstream of the product design phase. It will then be used to guide security considerations and choices throughout the product’s lifecycle.

“Manufacturers shall undertake an assessment of the cybersecurity risks associated with a product with digital elements and take the outcome of that assessment into account during the planning, design, development, production, delivery and maintenance phases of the product with a view to minimizing cybersecurity risks, preventing incidents and minimizing the impacts of such incidents, including in relation to the health and safety of users.” – CRA, Article 13.2

This idea of guaranteeing security at every phase of the product lifecycle, from the earliest stages of design to the end of the support period, is at the heart of the Cyber Resilience Act. Manufacturers now have a duty to ensure that their products are always secure, and not just when they are put on the market. Because it is the raison d’être of all the essential requirements of the regulation, ongoing product security must be a central concern for all security managers involved.

Elements to Be Included in the Cybersecurity Risk Assessment

Article 13 stipulates that the assessment must include, as a minimum, a cybersecurity risk analysis based on:

- The intended purpose and reasonably foreseeable use;

- The conditions of use of the product, such as the operational environment or the assets to be protected;

- The length of time the product is expected to be in use.

It must also clearly indicate:

- How the essential product requirements apply to the product concerned, and how they are implemented;

- How the manufacturer will ensure that the product is designed, developed and produced in such a way as to guarantee an appropriate level of cybersecurity;

- How the manufacturer will apply the essential requirements for vulnerability handling.
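The minimum content of the assessment can be captured in a structured record, so that gaps are visible before the design phase starts. The Python sketch below is a hypothetical structure. The field names are our own and do not come from the CRA or any standard.

```python
from dataclasses import dataclass, field


@dataclass
class RiskAssessment:
    """Hypothetical container for the minimum content of an Article 13
    cybersecurity risk assessment. Field names are illustrative only."""
    intended_purpose: str
    foreseeable_use: str
    operational_environment: str
    assets_to_protect: list[str]
    expected_use_years: float
    # Essential requirement -> how it is implemented, or why it does not apply.
    requirement_applicability: dict[str, str] = field(default_factory=dict)
    secure_development_approach: str = ""
    vulnerability_handling_plan: str = ""

    def gaps(self) -> list[str]:
        """List the minimum elements that are still missing or empty."""
        text_fields = ("intended_purpose", "foreseeable_use",
                       "operational_environment", "secure_development_approach",
                       "vulnerability_handling_plan")
        problems = [name for name in text_fields if not getattr(self, name).strip()]
        if not self.assets_to_protect:
            problems.append("assets_to_protect")
        if self.expected_use_years <= 0:
            problems.append("expected_use_years")
        if not self.requirement_applicability:
            problems.append("requirement_applicability")
        return problems


draft = RiskAssessment("smart door lock", "home access control",
                       "home Wi-Fi network", ["door access"], 8.0)
print(draft.gaps())
# ['secure_development_approach', 'vulnerability_handling_plan', 'requirement_applicability']
```

Because the assessment must be updated throughout the support period, a structure like this also lends itself to versioning alongside the product.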

A Due Diligence Obligation if the Product Integrates Third-Party Components, Including Open-Source Components

The CRA requires manufacturers to “exercise due diligence” if their products incorporate third-party components, including open-source ones. This means assessing the risks intrinsic to each component, as well as those posed by the way they are integrated into the product.

“Manufacturers shall exercise due diligence when integrating components sourced from third parties so that those components do not compromise the cybersecurity of the product with digital elements, including when integrating components of free and open-source software that have not been made available on the market in the course of a commercial activity.” – CRA, Article 13.5

The regulation requires manufacturers to report any vulnerability they discover in a third-party component to the person or entity manufacturing or maintaining that component, which is the bare minimum of politeness. The vulnerability must then be addressed and remediated in accordance with the essential requirements for vulnerability handling (Article 13.6). We believe that this is a tricky situation, since the maintainer of the third-party component will most often be the one responsible for correcting the vulnerability.

In our opinion, the wisest thing to do is to avoid, wherever possible, integrating third-party components with an identified vulnerability, all the more so as the CRA makes no distinction between vulnerabilities that represent a real security risk and those that would have no impact. Nevertheless, if the manufacturer develops a software or hardware modification to fix the vulnerability, they are obliged to share the relevant code or documentation with the person or entity maintaining the third-party component.
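In practice, this due diligence starts with comparing the components listed in the software bill of materials against known vulnerabilities. The result is then grouped by the person or entity owning or maintaining each component, so that the right party can be notified. The Python sketch below is a simplification. The component data and the `known_vulnerable` set are hypothetical, and it matches exact versions only, whereas real advisory databases express affected version ranges.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """A third-party component as it might appear in a software bill of materials."""
    name: str
    version: str
    maintainer: str  # person or entity manufacturing or maintaining it


def vulnerable_by_maintainer(
    components: list[Component],
    known_vulnerable: set[tuple[str, str]],
) -> dict[str, list[str]]:
    """Group components with a known vulnerability by maintainer.

    `known_vulnerable` is a hypothetical set of (name, version) pairs; a real
    pipeline would query an advisory database and match version ranges.
    """
    to_notify: dict[str, list[str]] = {}
    for c in components:
        if (c.name, c.version) in known_vulnerable:
            to_notify.setdefault(c.maintainer, []).append(f"{c.name}@{c.version}")
    return to_notify


sbom = [
    Component("libexample", "1.2.0", "Example Project"),
    Component("parserkit", "3.1.4", "Parserkit Maintainers"),
]
print(vulnerable_by_maintainer(sbom, {("libexample", "1.2.0")}))
# {'Example Project': ['libexample@1.2.0']}
```

Running the same check before integrating a new component is one way to avoid pulling in components with an identified vulnerability in the first place.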

Finally, be aware that the risk assessment must be included in the technical documentation, as well as being documented and updated throughout the product support period (see Chapter 13).

6. Bring Security Into Every Stage of the Product Creation Process

Once the risk assessment has been carried out, it’s time to move on to the real thing: product creation. Anyone who works in cybersecurity or product development knows where we’re going with this: the most important thing is security by design. Basically, this means implementing the 13 essential cybersecurity requirements for products by integrating security milestones at every stage of the lifecycle.

Moving From the General Requirements of the CRA to Harmonized Standards

First and foremost, you have to specify the technical solutions that will enable you to meet the essential requirements. The CRA is a piece of European legislation, not a technical document written by and for engineers. The essential requirements are never more than that; the CRA lists objectives to be achieved, but never says how they are to be achieved.

Let’s take the example of the second requirement applicable to products, namely that they should “be made available on the market with a secure default configuration.” Everyone can understand this clear, general objective, but it’s a different kettle of fish to make sure it’s actually achieved.

Transposing these general regulatory requirements into technical requirements with which manufacturers can comply is the role of European harmonized standards. These are technical specifications drawn up by European standardization bodies at the request of the European Commission. Applying them gives a presumption of conformity with the requirements they cover, attesting that products and services achieve a certain level of quality, security and reliability.

Harmonized standards exist for all sorts of things, and it’s only a matter of time before those recommended for the CRA emerge. However, there are already numerous cybersecurity standards and best practices whose application can only be beneficial. European harmonized standards will standardize what already exists and fill in the gaps, but useful groundwork can already be identified, provided you know where to look.

Mirror Essential Requirements With Technical Specifications

Until specific harmonized standards are available, one of the best documents available today is the Cyber Resilience Act Requirements Standards Mapping (2024), published by the Joint Research Centre (JRC) and the European Union Agency for Cybersecurity (ENISA).

This valuable document maps each of the CRA’s essential requirements to one or more existing standards. The primary aim of this publication is to contribute to the development of harmonized standards by identifying what already exists, but it can also be useful to manufacturers wishing to adopt cybersecurity best practices now.

Let’s take the example of the seventh essential requirement relating to products: “process only data, personal or other, that are adequate, relevant and limited to what is necessary in relation to the intended purpose of the product.”

Here, the JRC and ENISA document highlights the value of ISO/IEC 27701, an extension of ISO/IEC 27001 and ISO/IEC 27002 for privacy information management. Although not product-specific, ISO 27701 offers a useful mapping between various standards and legislation such as the GDPR, in addition to properly covering the concept of data minimization. It will therefore be useful to manufacturers with regard to the aforementioned essential requirement.

This is just one example, but the Standards Mapping offers interesting food for thought for each of the essential requirements of the regulation, both in terms of product cybersecurity and vulnerability management — even if, quite often, the authors’ observation is that what exists is too theoretical, and therefore insufficient, and that future harmonized standards will have to flesh out the expected technical specifications.

In any case, we highly recommend this read to anyone involved in product security issues, be they engineers, developers, product managers, CTOs, CPOs or others.

Plan Technical Specifications for Each Phase of the Product Lifecycle

Each essential requirement can be met by appropriate technical specifications, which can be planned for a specific phase of the product lifecycle. Clearly defining which requirements and specifications need to be addressed at which stage will help you organize your tasks and those of your teams more efficiently.

The first thing to do is to define precisely the stages in your product’s lifecycle. They may vary from one product to another, but some general breakdowns offer a solid basis for further work. For example, Article 13 of the CRA breaks down the lifecycle into six phases: planning, design, development, production, delivery and maintenance.

Personally, we prefer the approach suggested in the Cyber Resilience Act Requirements Standards Mapping, where the authors decompose the lifecycle of a product as follows:

- Design;

- Implementation;

- Validation;

- Commissioning;

- Surveillance/Maintenance;

- End of Life.

This breakdown is particularly appropriate, since it considers the end of the product’s life as part of the cycle; a wise choice, since the CRA’s security requirements must be applied from beginning to end.

Once the lifecycle phases and technical specifications to be implemented have been defined, all that’s left to do is match them up to get an overview of what needs to be done and when — yes, that’s easier said than done. Bear in mind, however, that you’ll have to wait for the harmonized standards to get the job done properly.
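Matching requirements to phases amounts to inverting a "requirement to phases" mapping into a per-phase plan. The Python sketch below uses the six phases listed above. The requirement-to-phase assignments in the example are hypothetical and chosen only for illustration; they are not taken from the Standards Mapping.

```python
from collections import defaultdict

# Lifecycle phases from the JRC/ENISA Standards Mapping, in lifecycle order.
PHASES = ["Design", "Implementation", "Validation", "Commissioning",
          "Surveillance/Maintenance", "End of Life"]


def plan_by_phase(assignments: dict[str, list[str]]) -> dict[str, list[str]]:
    """Invert a requirement -> phases mapping into phase -> requirements.

    Phases are returned in lifecycle order; unknown phase names are rejected.
    """
    plan: dict[str, list[str]] = defaultdict(list)
    for requirement, phases in assignments.items():
        for phase in phases:
            if phase not in PHASES:
                raise ValueError(f"unknown lifecycle phase: {phase!r}")
            plan[phase].append(requirement)
    return {phase: sorted(plan[phase]) for phase in PHASES if phase in plan}


# Hypothetical assignments chosen for illustration only.
example = {
    "secure default configuration": ["Design", "Commissioning"],
    "data minimisation": ["Design", "Implementation"],
    "regular security testing": ["Validation", "Surveillance/Maintenance"],
}
for phase, requirements in plan_by_phase(example).items():
    print(f"{phase}: {', '.join(requirements)}")
```

Phases that receive no requirement, such as End of Life in this example, are omitted from the output. That omission is itself a useful signal, since the CRA's requirements apply until the very end of the product's life.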

7. Run “Effective and Regular” Security Testing

While it is essential (and mandatory) to consider security throughout the entire lifecycle, it’s just as important to test the actual security of products.

Cybersecurity can’t just be theoretical: you have to make sure that everything you’ve put in place is actually effective. In other words, you need to introduce security milestones throughout the product lifecycle to detect vulnerabilities before a malicious actor can exploit them.

Regular and effective testing is an obligation for manufacturers, as it is the third essential requirement for vulnerability handling.

“Manufacturers shall apply effective and regular tests and reviews of the security of the product with digital elements.” – CRA, Annex I, Part 2.

Besides, some conformity assessment modules, such as Module H, to which we’ll return later, call for a detailed description of the tests “carried out before, during and after production, and the frequency with which they will be carried out”. So it’s not enough just to conduct security tests: you need to draw up and document a coherent, comprehensive and continuous testing strategy.

Why Carry out Security Testing?

Beyond being mandatory, testing is self-evidently necessary to ensure a minimum level of cybersecurity. Any product with digital elements inevitably contains vulnerabilities, and testing is there to detect and classify them.

Some vulnerabilities can be extremely difficult to identify, while others will be obvious to any tester. Similarly, the exploitation of some vulnerabilities can have dramatic consequences, while others will have no impact on the actual security of the product. After all, a vulnerability may concern a feature that cannot be exploited, or that cannot affect the security or usability of the product. Suppose a vulnerability in a smart clock only allows the color of the digital display to be changed from blue to red: it’s annoying, but it’s not the hack of the century…

It’s rather odd that this notion of the impact of exploitable vulnerabilities is absent from the Cyber Resilience Act. The omission is all the more regrettable given that some cybersecurity players had already pointed out this inconsistency in the first versions of the regulation. The French Alliance pour la Confiance Numérique (ACN, “Alliance for Digital Confidence”), for example, was already lamenting in early 2023 that “an exploitable vulnerability is by no means an absolute or binary concept”. The certification schemes introduced by the Cybersecurity Act do make this distinction, and we’ll discuss them in the next chapter. Let’s hope that the bridges between the CRA and these schemes will make up for this lack of nuance.

In any case, security testing is imperative because, beyond detection, it enables manufacturers to assess the criticality and potential impact of vulnerabilities on the product and its users. A vulnerability successfully exploited by a malicious actor can not only compromise everyone’s security, but also have consequences for brand image and business. As a manufacturer, it is therefore essential to do everything in your power to reduce the risks.

Which Security Tests to Choose?

There is no single form of testing that is sufficient to verify everything. The tests to be implemented depend on the objective being pursued and, above all, on the phase in the product’s lifecycle. Cybersecurity tests are numerous and eclectic, with different objectives and at different stages. It’s the combination of the various tests that makes it possible to build a solid testing strategy.

For example, during the software development phase, it is advisable to set up source code reviews, unit testing, integration testing or end-to-end testing. These take place upstream of production, during the Software Development Life Cycle (SDLC), as part of a DevSecOps approach. They do not specifically seek to identify vulnerabilities, but rather problems in general: not only security issues, but also compatibility or logic errors. Left undetected, these problems can lead to exploitable and dangerous vulnerabilities (or simply affect the quality and proper functioning of the product).

Other forms of testing are specifically designed to detect vulnerabilities proactively. They can be automatic, such as vulnerability scanning, or manual, such as penetration testing. They are part of what is more generally known as Offensive Security, or OffSec.

The Role of Offensive Security in Test Strategy

Offensive Security is a proactive approach to cybersecurity: rather than protecting products directly, it seeks to identify their vulnerabilities before malicious actors do. OffSec testing simulates attacks by adopting the posture and methods of cyber-attackers, in order to test the defenses of organizations and their products, identify potential weaknesses, and assess their actual level of security. To put it more simply: Offensive Security enables manufacturers to challenge their own products in order to identify and correct vulnerabilities.

OffSec enables the testing of almost any type of asset, from cloud infrastructures to web and mobile apps, and all forms of hardware products, whether consumer or industrial.

It’s no coincidence that we’re telling you all this: we, Yogosha, specialize in Offensive Security Testing.

Pentest as a Service (PtaaS)

Penetration testing is a technique used to simulate a cyber-attack on an asset, in this case a product, in order to identify its vulnerabilities. It’s a proven technique that security teams are already familiar with, and it’s imperative to use it to test regulated products before bringing them to market.

Yogosha offers an innovative approach to penetration testing: Pentest as a Service (PtaaS). An ideal solution for all organizations that need to carry out multiple tests throughout the year, on numerous products and scopes.

Pentest as a Service (PtaaS) allows for:

- Continuous security testing, which can be staggered throughout the product lifecycle, both before and after market launch, to ensure robust, ongoing security in line with the essential requirements of the CRA;

- Direct access to the Yogosha Strike Force (YSF), a community of over 1,000 certified security experts specializing in different types of assets and technologies;

- Digitization and industrialization of testing activities through a European OffSec platform available as SaaS, or self-hosted for players with the most stringent security requirements.

Adopt a Continuous Approach to Security Testing

The CRA calls for continuous product security throughout the whole lifecycle. At Yogosha, we live by this philosophy on a daily basis.

Historically, security testing has always been a one-off affair: a pentest before production, then nothing for months or even years. But because traditional security tests are one-off by nature, they are no longer suited to the security challenges today’s manufacturers face. They are useful for identifying vulnerabilities at a given point in time, but they cannot keep up with constantly evolving cyberthreats.

The digital ecosystem is dynamic, product updates are numerous and regular, new vulnerabilities appear every day, and malicious actors are constantly renewing their approaches. One-off testing offers only limited visibility, exposing companies to the potential risks that can arise between tests.

For CRA-regulated manufacturers with growing security needs, the answer lies elsewhere than in traditional approaches. They need to be able to handle Agile projects, numerous and regular deliveries, business imperatives… They need an approach to penetration testing that is flexible, scalable, streamlined and continuous.

With Pentest as a Service, Yogosha offers on-demand penetration testing, which can be easily scheduled and repeated throughout the product lifecycle. This not only complements in-house capabilities, but also ensures a constant, vigilant eye on the security of regulated products. This is a serious advantage, given that regular security testing is one of the essential requirements of the CRA.

Find Skilled Security Experts

Setting up security tests raises another issue: finding qualified experts to carry them out. Here, manufacturers have two options.

Firstly, they can build an in-house team. This option has many advantages, but unfortunately there’s a worldwide shortage of cybersecurity talent. Forbes estimated the number of vacancies in the sector at 3.5 million at the beginning of 2023, an increase of 350% in less than 10 years. Talent is scarce and expensive.

Secondly, they can turn to a specialized service provider. Many companies choose this option and outsource security testing, usually to an auditing firm. But a firm’s expertise is always limited to that of its own employees, and to their number. No pentester, however talented, can be familiar with every type of asset and technology. When choosing the service provider who will carry out the tests, it is therefore essential to ensure that they have the in-house skills to match the specificities of the scope to be tested.

Faced with these two challenges — finding qualified professionals or ensuring that the service provider has the necessary in-house skills — we offer a unique solution: the Yogosha Strike Force.

The Yogosha Strike Force, 1000+ Screened Security Researchers

The Yogosha Strike Force (YSF) is a private, selective community of over 1,000 international security researchers:

- Specialized in finding the most critical vulnerabilities by simulating sophisticated hacker attacks.

- Experts in multiple asset types: Web and Mobile Apps, IoT, Cloud, Networks, APIs, Infrastructure…

- Holders of recognized cybersecurity certifications — OSCP, OSEP, OSWE, OSEE, GXPN, GCPN, eWPTXv2, PNPT, CISSP…

We select only the most talented researchers: only 10% of applicants are accepted, after passing technical and report-writing exams. Identity and background checks are also carried out.

Through the Yogosha Strike Force, manufacturers falling under the Cyber Resilience Act have access to:

- A large number of carefully selected international security experts;

- An unrivaled range of skills to address all types of products and technologies.

“Through Yogosha, we’ve managed to find very talented people.” — Éric Vautier, CISO, Groupe ADP (Paris’ Airports)

Yogosha, an Offensive Security Platform Available as SaaS or Self-Hosted

Security testing involves handling sensitive data, such as information on potentially exploitable vulnerabilities. It is therefore essential to choose a reliable, well-secured testing provider, and it is up to regulated organizations to assess the quality of each provider.

For our part, we offer several deployment options for our Offensive Security platform, to meet the security requirements of every organization, including the most sensitive.

The Yogosha platform is available:

- SaaS: a turnkey solution, hosted by 3DS Outscale on its SecNumCloud-qualified sovereign cloud (SecNumCloud is the highest French cloud security standard, established by ANSSI, the French national cybersecurity agency). Data is hosted on French soil.

- Self-Hosted: a solution designed for organizations with the most stringent security requirements. You’re free to host the Yogosha platform wherever you like — private cloud, on premises — to retain total control over your data and the execution context.

In both cases, the intrinsic robustness of our product is at the heart of our concerns. We continually secure our assets through a DevSecOps pipeline, OWASP guidelines, recurrent penetration testing and an ongoing bug bounty program. In addition, Yogosha is in the process of obtaining ISO 27001 certification — something that will probably be completed by the time you read this.

Bug Bounty, an Approach Recommended by the Cyber Resilience Act

Bug Bounty is another approach to Offensive Security, referred to in Recital 77 of the CRA. It exposes products to real-world attack conditions by mobilizing numerous security researchers to test all or part of a manufacturer's products or systems.

Bug Bounty is a bug hunt based on a pay-per-result model. Organizations pay monetary rewards, called bounties, to researchers for each valid vulnerability they identify. The more critical the vulnerability, the higher the bounty. If the researchers find nothing, the organization spends nothing.
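To make the pay-per-result logic concrete, here is a minimal Python sketch. The severity tiers, the reward amounts in `REWARD_GRID`, and the names `Report`, `program_cost` and `payouts_by_researcher` are hypothetical illustrations, not Yogosha's reward grid or any platform's API; real programs define their own amounts and triage rules.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"
    CRITICAL = "critical"


# Hypothetical reward grid in euros: the more critical, the higher the bounty.
REWARD_GRID = {
    Severity.LOW: 100,
    Severity.MEDIUM: 500,
    Severity.HIGH: 1500,
    Severity.CRITICAL: 5000,
}


@dataclass(frozen=True)
class Report:
    researcher: str
    severity: Severity
    valid: bool  # True if triage confirmed an in-scope, reproducible, non-duplicate finding


def program_cost(reports: list) -> int:
    """Total spend: only valid reports are rewarded, scaled by severity."""
    return sum(REWARD_GRID[r.severity] for r in reports if r.valid)


def payouts_by_researcher(reports: list) -> dict:
    """Aggregate the bounties owed to each researcher for valid reports."""
    totals: dict = {}
    for r in reports:
        if r.valid:
            totals[r.researcher] = totals.get(r.researcher, 0) + REWARD_GRID[r.severity]
    return totals
```

With no valid report, `program_cost` returns 0, which is exactly the "if the researchers find nothing, the organization spends nothing" property; invalid or duplicate reports cost nothing either.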

We won’t dwell on Bug Bounty here, as it’s an important element of the Coordinated Vulnerability Disclosure (CVD) policy, which is one of the essential requirements of the CRA and the subject of Chapter 11 of this guide.

However, let’s state right now that Yogosha, as a specialist in Offensive Security, does of course offer bug bounty programs. In fact, this is one of our core activities, as we’re the only entirely private European platform to offer bug bounty programs, and have been doing so since 2015.

8. Obtain Cybersecurity Certification for Your Products

While testing helps reduce risk by identifying product vulnerabilities, certification attests to an overall high level of security. Obtaining cybersecurity certification means obtaining independent proof that the product meets a defined, demanding level of security.

Indeed, certification involves an in-depth assessment of the product’s security and the processes put in place by its manufacturer. If all goes well, certification is the reward for a job well done.

Why Get Cybersecurity Certification?

Achieving certification takes time, money and commitment from the teams involved. Some may wonder whether it’s worth the effort — spoiler: yes.

The benefits of certification are many, and not just in terms of security. In a competitive environment, certification can be a strong differentiator between products. Customers may be more inclined to choose a certified product over an uncertified one, because it assures them that the product meets high security standards. While cybersecurity is not (yet) a main purchasing criterion for the average consumer, it can be paramount when the customers are organizations, all the more so if they are regulated by the NIS2 Directive.

With regard to the CRA in particular, cybersecurity certification has one major advantage: under certain conditions, it exempts manufacturers from third-party conformity assessment procedures.

Products Certified Under a European Scheme Are Presumed Compliant With CRA Requirements

First and foremost, we need to talk briefly about the Cybersecurity Act (CSA). This is another major EU regulation, adopted in 2019, which among other things introduced an EU-wide cybersecurity certification framework for ICT products, services and processes. Its objective is to guarantee an adequate level of cybersecurity and to harmonize assessments, so that companies operating in the EU can certify their products once, with a certificate recognized in every Member State.

As you might guess, we’re not telling you this for your general knowledge. As a matter of fact, the Cyber Resilience Act provides that products certified according to a European certification scheme established under the Cybersecurity Act are presumed to comply with the essential requirements of the CRA, provided they have been assessed during the certification process.

“Products with digital elements and processes put in place by the manufacturer for which an EU statement of conformity or certificate has been issued under a European cybersecurity certification scheme adopted pursuant to Regulation (EU) 2019/881 [Editor’s note: the Cybersecurity Act], shall be presumed to be in conformity with the essential requirements set out in Annex I in so far as the EU statement of conformity or European cybersecurity certificate, or parts thereof, cover those requirements.” – CRA, Article 27.8

The regulation clearly states that only certifications issued as part of a European scheme under the Cybersecurity Act can constitute proof of conformity.

Furthermore, certification at an assurance level of at least “substantial” removes the obligation to carry out a third-party conformity assessment for the requirements it covers, an assessment otherwise mandatory for certain product categories (more on this later).

“Furthermore, the issuance of a European cybersecurity certificate issued under such schemes, at least at assurance level ‘substantial’, eliminates the obligation of a manufacturer to carry out a third-party conformity assessment for the corresponding requirements.” – CRA, Article 27.9
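The combined effect of Articles 27.8 and 27.9 can be read as simple set logic. In this minimal Python sketch, the requirement labels (`"R1"`, `"R2"`, `"R3"`) are hypothetical placeholders for Annex I requirements, and the `Certificate` type and function names are assumptions made for illustration; they say nothing about how certificates are actually structured or issued.

```python
from dataclasses import dataclass
from enum import IntEnum


class AssuranceLevel(IntEnum):
    # Cybersecurity Act assurance levels, ordered so they can be compared.
    BASIC = 1
    SUBSTANTIAL = 2
    HIGH = 3


@dataclass(frozen=True)
class Certificate:
    # Hypothetical model of a certificate, for illustration only.
    scheme: str
    adopted_under_csa: bool  # scheme adopted pursuant to Regulation (EU) 2019/881
    level: AssuranceLevel
    covered: frozenset       # Annex I requirements assessed during certification


def presumed_conformant(cert: Certificate, annex_i: set) -> set:
    """Article 27.8: covered requirements are presumed met, for CSA schemes only."""
    if not cert.adopted_under_csa:
        return set()
    return annex_i & cert.covered


def third_party_waived(cert: Certificate, annex_i: set) -> set:
    """Article 27.9: at 'substantial' or above, no third-party assessment
    is needed for the corresponding (covered) requirements."""
    if cert.level < AssuranceLevel.SUBSTANTIAL:
        return set()
    return presumed_conformant(cert, annex_i)


def still_to_demonstrate(cert: Certificate, annex_i: set) -> set:
    """Requirements outside the certificate's coverage must be addressed otherwise."""
    return annex_i - presumed_conformant(cert, annex_i)
```

A certificate from a national scheme outside the CSA framework yields empty sets in both `presumed_conformant` and `third_party_waived`, mirroring the point that only European schemes count, while a CSA certificate only helps for the requirements it actually covers.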

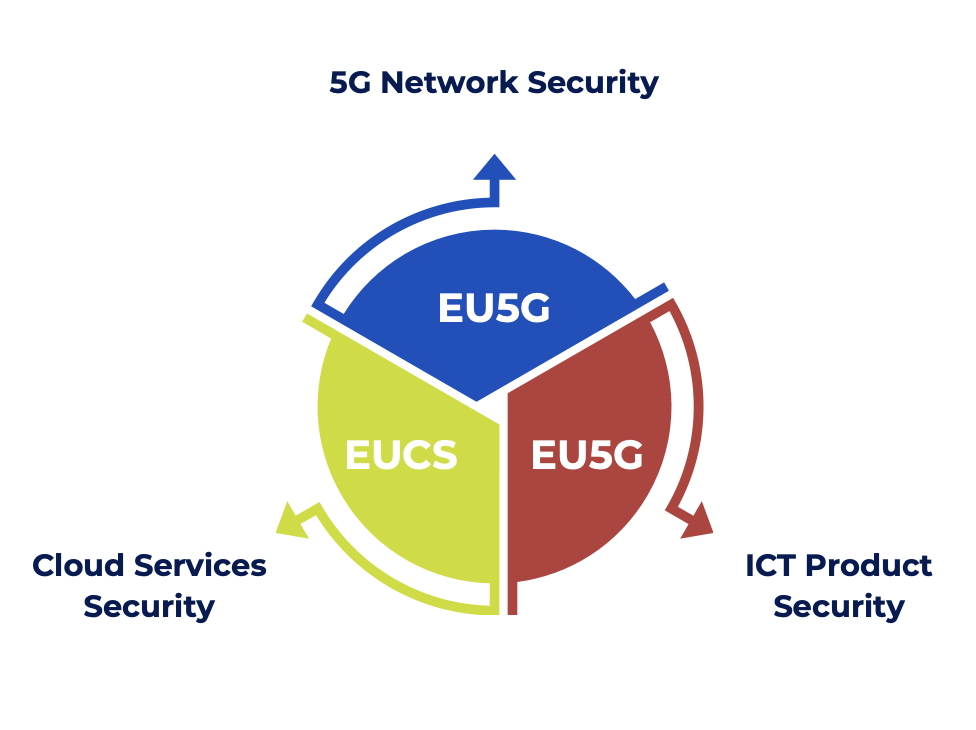

European Certification Schemes Introduced by the Cybersecurity Act (CSA)

Three European certification schemes have been developed under the Cybersecurity Act framework, with their preparation entrusted to ENISA:

- The EU5G scheme for 5G network security;

- The EUCS scheme for the security of cloud services;

- The EUCC scheme for ICT products (hardware, software and components).

It’s the latter scheme that is of particular interest to us in the context of the CRA.

The EUCC Scheme: Harmonized Certification Rules for ICT Products

The EUCC (EU Common Criteria) scheme is the first — and so far only — of the three frameworks to have been officially adopted by the European Commission, on January 31, 2024. It provides a set of harmonized rules and procedures for certifying ICT products, both hardware and software, the very ones regulated by the Cyber Resilience Act.

The EUCC framework is based on the Common Criteria (an international standard, ISO/IEC 15408, for evaluating the security of IT products), and draws on the existing national schemes brought together under SOG-IS, a European agreement for the mutual recognition of certificates issued by national authorities such as ANSSI in France. ANSSI has also confirmed that “existing SOG-IS certificates can be re-evaluated as EUCC certificates as soon as the new requirements are met” (Source), so we can assume that the same will apply in other countries.

We won’t go into further detail on the content of the EUCC scheme, as the topic is so rich that it could easily be the subject of another guide. However, if you are interested in learning more, here are a few key documents:

- The implementing act for the EUCC scheme published in the Official Journal of the EU;

- A set of texts on the state of the art for the EUCC scheme, published by ENISA. You’ll find numerous documents on subjects such as accreditation of conformity assessment bodies, or the technical aspects of assessing smartcards or hardware devices with security boxes, two critical product categories under the CRA.

It should be noted that the first EUCC certificates can be issued from February 2025, one year after publication of the scheme.

Which Products Are Subject to Mandatory Certification?

Products deemed critical under the CRA may be subject to mandatory certification, but not by default. Article 8 empowers the European Commission to decide, through delegated acts, which categories of critical products must obtain a European cybersecurity certificate at an assurance level of at least “substantial”. Here again, the extent to which entities regulated by the NIS2 Directive depend on the products in question will be a determining factor.

This obligation only arises through such a delegated act; in its absence, critical products are not required to undergo certification. They are then subject to the same conformity assessment procedures as important Class II products, to which we’ll return in the next chapter.

When a delegated act introduces a certification obligation for a category of critical products, the general rule is a 6-month transition period before the new rules apply, unless a security imperative justifies faster application.

9. Determine the Conformity Assessment Procedure to Be Applied for Each Product

Implementing the essential requirements of the Cyber Resilience Act is mandatory, and manufacturers will have to demonstrate compliance before placing regulated products on the market. This means providing proof of compliance in the form of a conformity assessment.

The CRA establishes several conformity assessment procedures, whose stringency depends on the product’s level of risk. The first level is self-assessment by the manufacturer, while the more demanding procedures require the intervention of a third-party assessment body, or cybersecurity certification.

Four Assessment Procedures Based on Different Modules

Article 32 of the CRA introduces four conformity assessment procedures, each based on one or more modules:

- Assessment procedure 1: Module A

- Assessment procedure 2: Module B + Module C

- Assessment procedure 3: Module H

- Assessment procedure 4: Certification under a European scheme issued pursuant to the Cybersecurity Act, a topic we discussed in the previous chapter.

Manufacturers can use any of these procedures, with the exception of important or critical products which cannot benefit from the first.

The modules in question are:

- Module A: Conformity assessment procedure based on internal control;

- Module B: EU-type examination;

- Module C: Conformity to type based on internal production control;

- Module H: Conformity based on full quality assurance.

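Module A consists of self-assessment by the manufacturer. With modules B + C, a notified body (an independent conformity assessment body) examines the product's technical design (Module B), and the manufacturer then ensures that production conforms to the approved type (Module C). Module H requires the manufacturer to apply an appropriate quality system approved and monitored by a notified body.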

We won’t detail the exact content of each module in this guide, as all the relevant information is clearly set out in Annex VIII of the Cyber Resilience Act.

Nevertheless, you should be aware that, whatever the module, the documentation required is substantial. None of the four is an easy task, and all will require organization and discipline in the management of the compliance project.

The General Case: Self-Assessment

Where products are neither important nor critical, manufacturers can opt for self-assessment, which is the first assessment procedure (Module A). This is the simplest of all the methods set out in the regulation.

In this case, the manufacturer assures and declares under his sole responsibility that the products meet all the essential cybersecurity requirements, and that he has complied with the essential requirements for vulnerability handling. He must also draw up the technical documentation and the EU declaration of conformity, and affix the CE marking to the product.

Manufacturers who can benefit from self-assessment but wish to apply a stricter procedure are naturally free to do so.

Evaluation of Important and Critical Products

Important products (classes I and II) can only be evaluated using procedures 2, 3 or 4. It is therefore compulsory to involve an independent assessment body, or to obtain appropriate cybersecurity certification (see next section). Self-assessment is not an option.
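The rules described in this chapter can be summarized as a lookup from product category to permitted procedures. This minimal Python sketch encodes only the simplified rules stated above, including whether a delegated act under Article 8 mandates certification for a critical product; Article 32 contains further conditions and exceptions that it deliberately leaves out, so treat it as a reading aid rather than a compliance tool. The names `Category`, `PROCEDURES` and `allowed_procedures` are hypothetical.

```python
from enum import Enum


class Category(Enum):
    DEFAULT = "default"  # neither important nor critical
    IMPORTANT_CLASS_I = "important-I"
    IMPORTANT_CLASS_II = "important-II"
    CRITICAL = "critical"


# The four procedures of Article 32 and the modules they rely on.
PROCEDURES = {
    1: "Module A: internal control",
    2: "Module B + Module C: EU-type examination, then conformity to type",
    3: "Module H: full quality assurance",
    4: "European cybersecurity certification (Cybersecurity Act scheme)",
}


def allowed_procedures(category: Category, certification_mandated: bool = False) -> set:
    """Return the procedure numbers available for a product category.

    certification_mandated: True if a delegated act (Article 8) requires
    certification, at assurance level 'substantial' or above, for this
    category of critical product.
    """
    if category is Category.DEFAULT:
        # Self-assessment is allowed; stricter procedures remain an option.
        return {1, 2, 3, 4}
    if category is Category.CRITICAL and certification_mandated:
        return {4}
    # Important products (classes I and II), and critical products without
    # a delegated act, must involve a third party or certification.
    return {2, 3, 4}
```

For example, `allowed_procedures(Category.CRITICAL)` returns `{2, 3, 4}`, the same options as an important Class II product, while `allowed_procedures(Category.CRITICAL, certification_mandated=True)` returns `{4}`.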